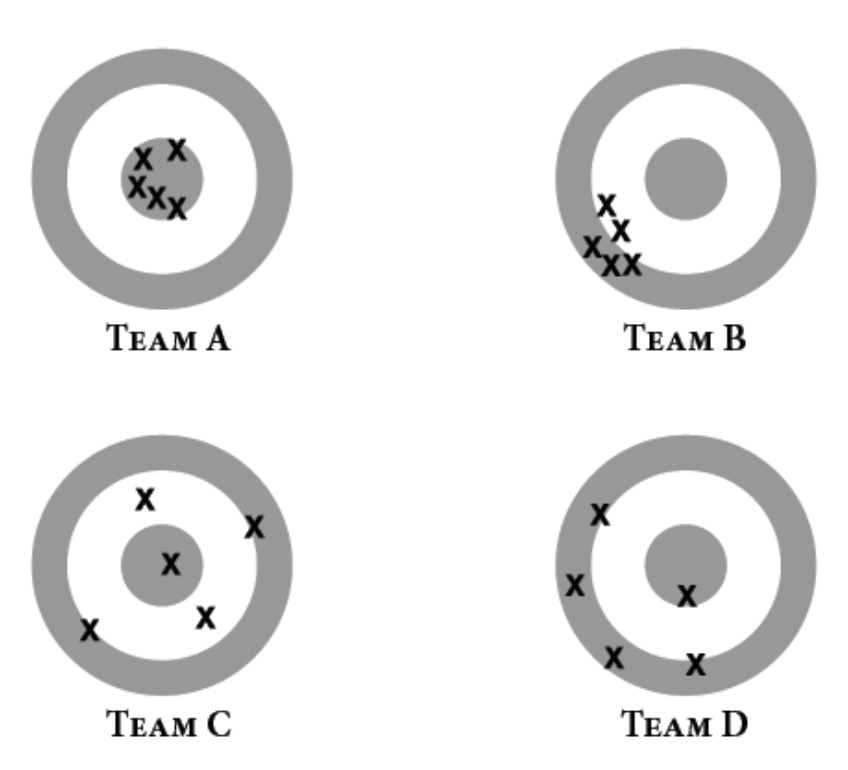

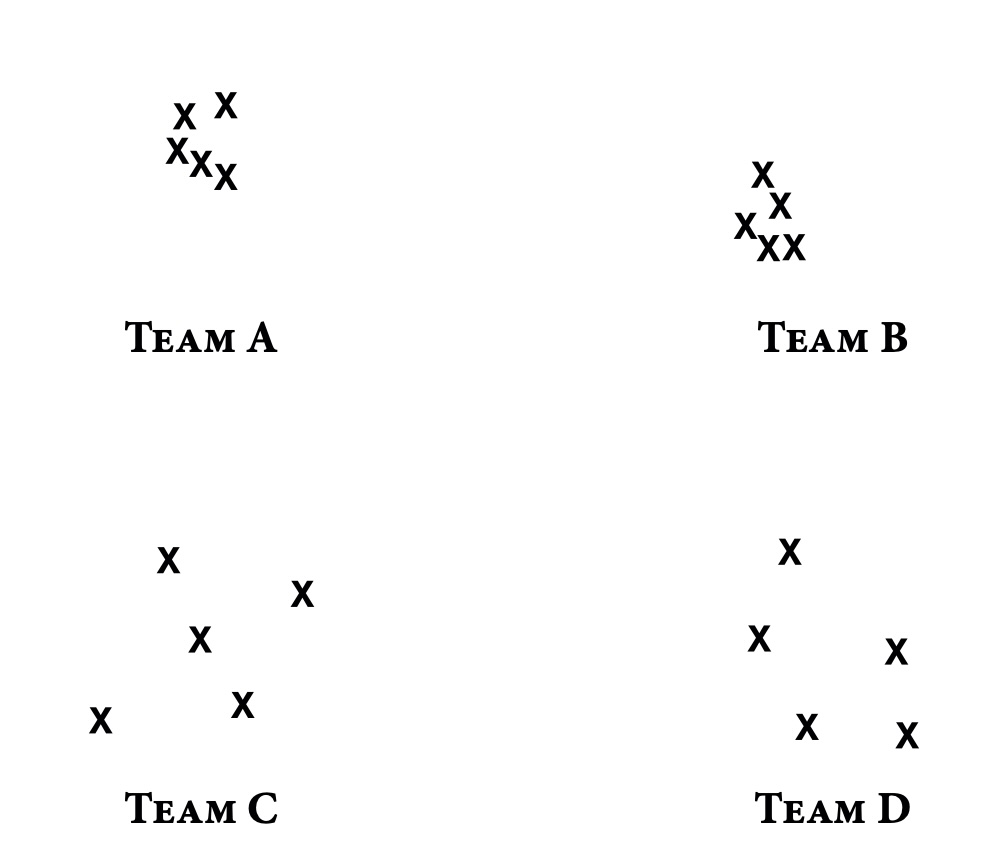

In their book “Noise: A Flaw in Human Judgment”, Kahneman, Sibony, and Sunstein (a.k.a. the psychology Avengers) share a super helpful way to understand the difference between noise and bias. Imagine a shooting target and four different teams, each with five people taking shots. The results are in the target figure.

So what’s the deal here?

- Bias = how far the average shot is from the bullseye.

- Noise = how spread out the shots are from each other within a team.

Here’s the breakdown:

- Team A: Slight bias, low noise (pretty good!)

- Team B: Strong bias, low noise (consistently wrong—yikes)

- Team C: Slight bias, high noise (some hits, some wild misses)

- Team D: Strong bias, high noise (total chaos)
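To make the two definitions concrete, here is a minimal Python sketch that scores a team's shots. The coordinates are made up, the bullseye is assumed to sit at (0, 0), and noise is measured as the root-mean-square distance of each shot from the team's own average shot, one reasonable 2D stand-in for the standard deviation the book uses. The final check is the book's "error equation": overall error (mean squared distance from the bullseye) equals bias squared plus noise squared.

```python
import math

Point = tuple[float, float]
BULLSEYE: Point = (0.0, 0.0)  # assumption: the target's center is the origin


def mean_point(shots: list[Point]) -> Point:
    """Average position of a team's shots."""
    n = len(shots)
    return (sum(x for x, _ in shots) / n, sum(y for _, y in shots) / n)


def bias(shots: list[Point], target: Point = BULLSEYE) -> float:
    """How far the average shot is from the bullseye."""
    return math.dist(mean_point(shots), target)


def noise(shots: list[Point]) -> float:
    """How spread out the shots are: RMS distance from the team's own average shot."""
    center = mean_point(shots)
    return math.sqrt(sum(math.dist(s, center) ** 2 for s in shots) / len(shots))


def total_error(shots: list[Point], target: Point = BULLSEYE) -> float:
    """Mean squared distance of every shot from the bullseye."""
    return sum(math.dist(s, target) ** 2 for s in shots) / len(shots)


# Hypothetical Team B: five tightly grouped shots, all far up and to the right.
team_b = [(3.0, 3.0), (3.2, 2.9), (2.9, 3.1), (3.1, 3.0), (2.8, 3.0)]
print(f"bias={bias(team_b):.2f}, noise={noise(team_b):.2f}")

# Error equation: total error = bias^2 + noise^2.
assert math.isclose(total_error(team_b), bias(team_b) ** 2 + noise(team_b) ** 2)
```

A large bias with a small noise, as here, is the Team B pattern: consistently wrong.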

Now, let’s talk AI. Just like these shooters, AI models—especially those used as assistants—can also have both bias and noise.

Noise

Let’s start with noise. For a single AI model, noise is how much the answers vary when you ask the same question multiple times. Sometimes it’s poetic and wise, sometimes it’s… weirdly obsessed with pancakes.

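You can actually tweak this noise level with sampling knobs like temperature, top-k, and top-p, kind of like adjusting the spice level on your takeout order. Temperature controls how willing the model is to pick less likely words, top-k limits each pick to the k most likely candidates, and top-p limits it to the smallest set of candidates whose combined probability reaches p. Want something consistent and grounded? Dial them down. Want creative or unexpected? Crank them up.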
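For the curious, here is roughly what temperature, top-k, and top-p do when a model picks its next word, as a minimal Python sketch. It is a simplified illustration of the common decoding recipe, not any vendor's actual implementation: `sample_next_token` is a hypothetical helper, the toy vocabulary and scores are invented, and real model APIs apply these settings internally (not every API exposes all three).

```python
import math
import random
from typing import Optional


def sample_next_token(
    logits: dict[str, float],
    temperature: float = 1.0,
    top_k: Optional[int] = None,  # assumed to be >= 1 when given
    top_p: Optional[float] = None,
    rng: Optional[random.Random] = None,
) -> str:
    """Pick one next token from raw scores using temperature, top-k, and top-p."""
    rng = rng or random.Random()
    if temperature <= 0:
        # Temperature 0 is commonly treated as greedy decoding: always take the top token.
        return max(logits, key=logits.get)

    # Temperature rescales the scores: below 1 sharpens the distribution, above 1 flattens it.
    scaled = {tok: score / temperature for tok, score in logits.items()}
    peak = max(scaled.values())  # subtract the max for a numerically stable softmax
    weights = {tok: math.exp(s - peak) for tok, s in scaled.items()}
    total = sum(weights.values())
    ranked = sorted(((tok, w / total) for tok, w in weights.items()),
                    key=lambda pair: pair[1], reverse=True)

    if top_k is not None:
        # Top-k: keep only the k most likely tokens, then renormalize.
        ranked = ranked[:top_k]
        kept_mass = sum(p for _, p in ranked)
        ranked = [(tok, p / kept_mass) for tok, p in ranked]

    if top_p is not None:
        # Top-p (nucleus): keep the smallest prefix whose probability reaches top_p.
        nucleus, cumulative = [], 0.0
        for tok, p in ranked:
            nucleus.append((tok, p))
            cumulative += p
            if cumulative >= top_p:
                break
        ranked = nucleus

    tokens = [tok for tok, _ in ranked]
    probs = [p for _, p in ranked]
    return rng.choices(tokens, weights=probs, k=1)[0]  # choices() normalizes weights itself


# Hypothetical next-word scores for a toy vocabulary.
logits = {"wise": 2.0, "poetic": 1.5, "pancakes": 0.1}
rng = random.Random(42)
for t in (0.2, 1.0, 2.0):
    picks = [sample_next_token(logits, temperature=t, rng=rng) for _ in range(1000)]
    print(t, {tok: picks.count(tok) for tok in logits})
```

At low temperature nearly every pick is "wise"; as the temperature rises, "pancakes" starts showing up more often. That is the spice dial in miniature.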

Now, between different AI models (say, from OpenAI vs. Google vs. Anthropic), noise shows up when they give different answers to the same question. That’s because they’ve all been trained on different data, with different priorities, by different teams of engineers and researchers. So yeah, they don’t always agree—just like your group chat when deciding on dinner.
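One simple way to put a number on this kind of noise is to ask the same question several times (or of several models) and count how often pairs of answers disagree. The Python sketch below assumes short answers that can be compared by exact match after lowercasing and trimming whitespace; the answers and helper names are hypothetical, and comparing longer free-text answers would need a semantic similarity measure instead.

```python
from itertools import combinations


def normalize(answer: str) -> str:
    """Lowercase and collapse whitespace so trivial formatting differences don't count."""
    return " ".join(answer.lower().split())


def disagreement_rate(answers: list[str]) -> float:
    """Share of answer pairs that differ: 0.0 means full agreement, 1.0 means no two agree."""
    pairs = list(combinations([normalize(a) for a in answers], 2))
    if not pairs:
        return 0.0
    return sum(a != b for a, b in pairs) / len(pairs)


# Within-model noise: one hypothetical model asked "One word for the ocean?" five times.
same_model = ["vast", "Vast", "vast ", "deep", "vast"]
# Between-model noise: three hypothetical models asked once each.
across_models = ["vast", "deep", "blue"]
print(disagreement_rate(same_model), disagreement_rate(across_models))
```

The same measure works for both cases discussed here: repeated runs of one model, or single runs of several different models.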

Bias

Now here’s where it gets trickier. While noise can be tuned or reduced, bias in AI is tougher to fix. It’s baked into the training data, model architecture, fine-tuning choices, and so on.

One infamous example: shortly after Google added image generation to Gemini, it had an embarrassing moment (one headline read “Google Chatbot’s A.I. Images Put People of Color in Nazi-Era Uniforms”). Users noticed that when asked for an image of a German soldier from the Nazi era, the model often depicted people of color, which is clearly historically inaccurate. Thankfully, Google acted quickly and paused Gemini’s generation of images of people while it fixed the problem, but it was a reminder: bias is real and not always easy to spot right away.

When is bias easy to catch? When the truth is clear and well-known. Like, if an AI says the Eiffel Tower is 10 feet tall, you can confidently call it out. (Unless it’s describing a souvenir.)

But here’s the real puzzle: what about questions where the truth isn’t obvious, or maybe even unknowable? Like “What is consciousness?” or “Is the universe infinite?” Now we’re looking at the back of the target paper, where the bullseye isn’t visible.

In this scenario, we can’t actually tell who’s biased. All we can really see is the noise. We might notice that some teams/models are all over the place, and some are more consistent—but we can’t say for sure which one is actually hitting the truth.

Here’s why that’s useful:

- If all the models give similar answers, that boosts your confidence. Maybe they’re on to something.

- If the answers vary wildly, that’s still helpful. You get a spectrum of ideas to explore, and sometimes the best insight is hidden in the outlier.
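Here is a minimal Python sketch of reading a council of answers along those lines: report the majority answer (if there is one), how strongly the models agree, and which models went their own way. The model names and answers are hypothetical, "majority" is assumed to mean more than half, and answers are compared by exact match after lowercasing, a crude stand-in for real semantic matching.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass
class CouncilSummary:
    consensus: Optional[str]  # majority answer, or None if no answer has more than half
    agreement: float          # share of models that gave the most common answer
    outliers: dict[str, str]  # models whose answer nobody else gave


def normalize(answer: str) -> str:
    """Lowercase and collapse whitespace before comparing answers."""
    return " ".join(answer.lower().split())


def summarize_council(answers: dict[str, str]) -> CouncilSummary:
    """Summarize answers keyed by model name (assumes at least one answer)."""
    counts = Counter(normalize(a) for a in answers.values())
    top_answer, top_count = counts.most_common(1)[0]
    agreement = top_count / len(answers)
    consensus = top_answer if agreement > 0.5 else None
    outliers = {model: a for model, a in answers.items() if counts[normalize(a)] == 1}
    return CouncilSummary(consensus, agreement, outliers)


# Hypothetical answers to "Is the universe infinite?"
answers = {
    "model_a": "Unknown",
    "model_b": "unknown",
    "model_c": "Probably infinite",
    "model_d": "Unknown",
}
print(summarize_council(answers))
```

A high agreement score is the "maybe they're on to something" case, and the `outliers` mapping is where to look for the hidden insight.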

It’s a bit like crowd wisdom: combining different perspectives can actually lead to better decisions—even if some of the contributors are a little off.

Of course, even when everyone agrees, there’s still a chance they’re all wrong in the same way (hello, Team B). But when you see diverse, independent models arriving at similar conclusions, it gives you a pretty solid reason to trust the result.
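A small simulation shows both sides of this argument. Assume each model's numeric estimate is the truth plus a bias shared by every model plus its own independent random noise (all numbers here are hypothetical). Averaging more models then shrinks the noise, roughly by a factor of √n, but leaves the shared bias untouched.

```python
import random
import statistics

TRUTH = 100.0  # hypothetical true value the council is estimating


def council_average(n_models: int, shared_bias: float, noise_sd: float,
                    rng: random.Random) -> float:
    """Average of n model estimates, each = truth + shared bias + independent noise."""
    return statistics.fmean(
        TRUTH + shared_bias + rng.gauss(0.0, noise_sd) for _ in range(n_models)
    )


def offset_and_spread(n_models: int, shared_bias: float = 5.0, noise_sd: float = 10.0,
                      trials: int = 2000, seed: int = 0) -> tuple[float, float]:
    """Run the council many times; return (average signed error, spread) of its answer."""
    rng = random.Random(seed)
    results = [council_average(n_models, shared_bias, noise_sd, rng) for _ in range(trials)]
    return statistics.fmean(results) - TRUTH, statistics.pstdev(results)


for n in (1, 5, 25):
    offset, spread = offset_and_spread(n)
    print(f"{n:>2} models: off by {offset:5.2f} on average, spread {spread:5.2f}")
```

With `shared_bias=0.0`, a bigger council homes in on the truth. With a shared bias, it just becomes more tightly, and more confidently, wrong: Team B again.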

So yeah, Council AI is like your personal brain trust, pulling insights from the brightest AI minds out there. It’s available now on iOS—go ahead, download it and give it a spin. Ask it something fun. Or deep. Or weird.

Let the council speak!
